100 arquivos alterados com 25449 adições e 0 exclusões

+ 168

- 0

src/detection/CenterPoint-master/.clang-format

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 10

- 0

src/detection/CenterPoint-master/.gitignore

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 104

- 0

src/detection/CenterPoint-master/CMakeLists.txt

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 170

- 0

src/detection/CenterPoint-master/CenterPoint.pro

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 21

- 0

src/detection/CenterPoint-master/LICENSE

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 199

- 0

src/detection/CenterPoint-master/README.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN

src/detection/CenterPoint-master/build-qt/CenterPoint

BIN

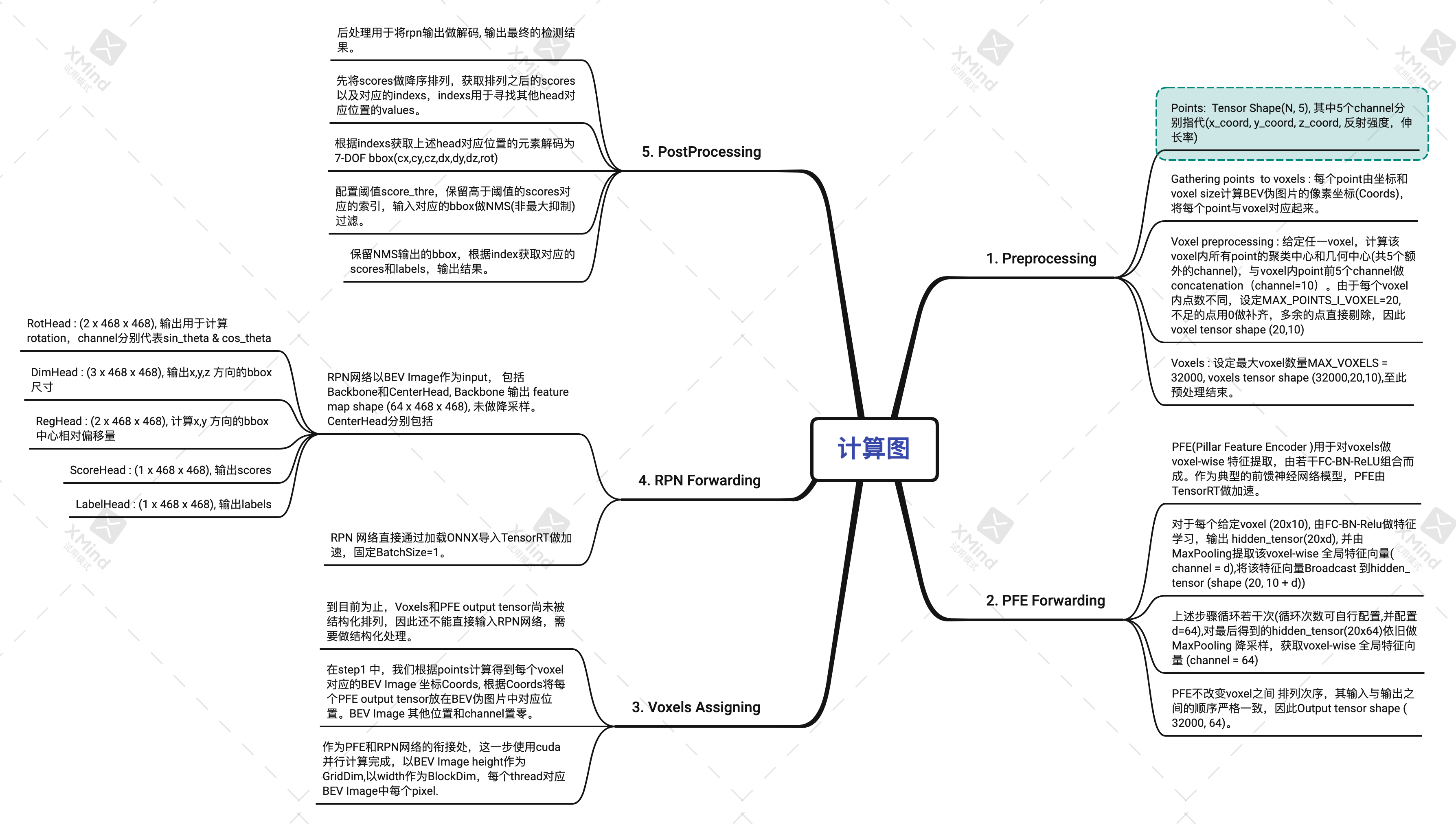

src/detection/CenterPoint-master/doc/computation_graph.png

BIN

src/detection/CenterPoint-master/doc/entropy_track.gif

BIN

src/detection/CenterPoint-master/doc/explicit_track.gif

BIN

src/detection/CenterPoint-master/doc/fp_det.gif

BIN

src/detection/CenterPoint-master/doc/fp_track.gif

+ 1

- 0

src/detection/CenterPoint-master/google912b2d6b509a62f4.html

|

||

|

||

+ 539

- 0

src/detection/CenterPoint-master/include/BatchStream.h

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 149

- 0

src/detection/CenterPoint-master/include/EntropyCalibrator.h

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 223

- 0

src/detection/CenterPoint-master/include/centerpoint.h

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 147

- 0

src/detection/CenterPoint-master/include/common/ErrorRecorder.h

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 217

- 0

src/detection/CenterPoint-master/include/common/argsParser.h

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 467

- 0

src/detection/CenterPoint-master/include/common/buffers.h

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 971

- 0

src/detection/CenterPoint-master/include/common/common.h

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 116

- 0

src/detection/CenterPoint-master/include/common/dumpTFWts.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 247

- 0

src/detection/CenterPoint-master/include/common/getOptions.cpp

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 127

- 0

src/detection/CenterPoint-master/include/common/getOptions.h

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 4302

- 0

src/detection/CenterPoint-master/include/common/half.h

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||